More and more products and services are taking advantage of the modeling and prediction capabilities of AI. This article presents the nvidia-docker tool for integrating AI (Artificial Intelligence) software bricks into a microservice architecture. The main advantage explored here is the use of the host system's GPU (Graphical Processing Unit) resources to accelerate multiple containerized AI applications.

To understand the usefulness of nvidia-docker, we will start by describing what kind of AI can benefit from GPU acceleration. Then we will present how to implement the nvidia-docker tool. Finally, we will describe what tools are available to use GPU acceleration in your applications and how to use them.

Why use GPUs in AI applications?

In the field of artificial intelligence, two main subfields are used: machine learning and deep learning. The latter is part of a larger family of machine learning methods based on artificial neural networks.

In the context of deep learning, where operations are essentially matrix multiplications, GPUs are more efficient than CPUs (Central Processing Units). This is why the use of GPUs has grown in recent years. Indeed, GPUs are considered the heart of deep learning because of their massively parallel architecture.
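To make the parallelism argument concrete, here is a minimal pure-Python sketch of a matrix multiplication. It is not GPU code and does not come from the original article; the function name `matmul` is illustrative. The point is that each output cell depends only on one row of `a` and one column of `b`, so all cells could be computed independently, which is the property a GPU exploits by spreading cells across many threads.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner, cols = len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    # Each (i, j) cell is an independent dot product: no cell needs the
    # result of another one, which is what makes the work parallelizable.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)
```

On a CPU these cells are computed one after another (or a few at a time); a GPU library such as cuBLAS performs the same arithmetic with a very large number of cells in flight at once.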

However, GPUs cannot execute just any program. Indeed, they use a specific language (CUDA for NVIDIA) to take advantage of their architecture. So, how do you use and communicate with GPUs from your applications?

The NVIDIA CUDA technology

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing architecture combined with an API for programming GPUs. CUDA translates application code into an instruction set that GPUs can execute.

A CUDA SDK and libraries such as cuBLAS (Basic Linear Algebra Subroutines) and cuDNN (Deep Neural Network) have been developed to communicate easily and efficiently with a GPU. CUDA is available in C, C++ and Fortran. There are wrappers for other languages including Java, Python and R. For example, deep learning libraries like TensorFlow and Keras are based on these technologies.

Why use nvidia-docker?

Nvidia-docker addresses the needs of developers who want to add AI functionality to their applications, containerize them and deploy them on servers powered by NVIDIA GPUs.

The goal is to set up an architecture that allows the development and deployment of deep learning models in services available via an API. Thus, the utilization rate of GPU resources is optimized by making them available to multiple application instances.

In addition, we benefit from the advantages of containerized environments:

- Isolation of instances of each AI model.

- Colocation of several models with their specific dependencies.

- Colocation of the same model under several versions.

- Consistent deployment of models.

- Model performance monitoring.

Natively, using a GPU in a container requires installing CUDA in the container and giving privileges to access the device. With this in mind, the nvidia-docker tool has been developed, allowing NVIDIA GPU devices to be exposed in containers in an isolated and secure manner.

At the time of writing this article, the latest version of nvidia-docker is v2. This version differs greatly from v1 in the following ways:

- Version 1: Nvidia-docker is implemented as an overlay to Docker. That is, to create the container you had to use nvidia-docker (Ex: `nvidia-docker run ...`), which performs the actions (among others, the creation of volumes) that make the GPU devices visible in the container.

- Version 2: The deployment is simplified by replacing Docker volumes with Docker runtimes. To launch a container, it is now necessary to use the NVIDIA runtime via Docker (Ex: `docker run --runtime nvidia ...`).
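The difference between the two launch styles can be sketched with a small Python helper that only builds the command line and does not execute anything. The helper name `gpu_run_command` and the image name `my-ai-service:latest` are hypothetical placeholders, not part of nvidia-docker; the `--rm` flag is a standard Docker option added here for convenience.

```python
import shlex


def gpu_run_command(image, command, version=2):
    """Build (but do not execute) a GPU container launch command."""
    if version == 1:
        # v1: the nvidia-docker wrapper is called instead of the docker CLI.
        args = ["nvidia-docker", "run"]
    elif version == 2:
        # v2: the regular docker CLI is used, with the NVIDIA runtime selected.
        args = ["docker", "run", "--runtime", "nvidia"]
    else:
        raise ValueError("version must be 1 or 2")
    # --rm removes the container once the command exits.
    return args + ["--rm", image] + list(command)


# "my-ai-service:latest" is a placeholder image name.
v2_command = gpu_run_command("my-ai-service:latest", ["nvidia-smi"])
print(shlex.join(v2_command))
```

Building the argument list separately from running it makes the v1/v2 difference easy to see and to test; passing the list to `subprocess.run` would be one way to actually launch the container on a host where the runtime is installed.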

Note that due to their different architectures, the two versions are not compatible. An application written for v1 must be rewritten for v2.

Setting up nvidia-docker

The required elements to use nvidia-docker are:

- A container runtime.

- An available GPU.

- The NVIDIA Container Toolkit (main part of nvidia-docker).

Prerequisites

Docker

A container runtime is required to run the NVIDIA Container Toolkit. Docker is the recommended runtime, but Podman and containerd are also supported.

The official documentation gives the installation procedure for Docker.
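Before going further, it can be useful to confirm that a container runtime CLI is actually installed on the host. The following is a minimal sketch, assuming the CLIs are named `docker` and `podman` on the `PATH`; the function name `find_container_runtime` is illustrative and the check says nothing about whether the daemon is running.

```python
import shutil


def find_container_runtime(candidates=("docker", "podman"), which=shutil.which):
    """Return the first candidate CLI found on PATH, or None."""
    for name in candidates:
        if which(name) is not None:
            return name
    return None


print(find_container_runtime() or "no container runtime CLI found on PATH")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the default `shutil.which` is enough.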

NVIDIA driver

Drivers are required to use a GPU device. In the case of NVIDIA GPUs, the drivers corresponding to a given OS can be obtained from the NVIDIA driver download page, by filling in the information on the GPU model.

The drivers are installed via the downloaded executable. For Linux, use the following commands, replacing the name of the downloaded file:

chmod +x NVIDIA-Linux-x86_64-470.94.run

./NVIDIA-Linux-x86_64-470.94.run

Reboot the host machine at the end of the installation so that the installed drivers are taken into account.
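Once the host has rebooted, one way to check that the driver is loaded is to query `nvidia-smi`, the utility shipped with the NVIDIA driver. The sketch below is an illustration rather than part of the original procedure: the helper names are hypothetical, and the function simply returns an empty list when `nvidia-smi` is missing or fails.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"]


def parse_gpu_lines(output):
    """Turn 'name, driver_version' CSV lines into (name, version) tuples."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue
        # Split on the last comma so the name is kept whole.
        name, _, version = line.rpartition(",")
        gpus.append((name.strip(), version.strip()))
    return gpus


def list_gpus():
    """Return the GPUs reported by nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


print(list_gpus())
```

An empty list after a driver installation suggests that the driver is not loaded or that the reboot has not happened yet.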

Installing nvidia-docker

Nvidia-docker is available on the GitHub project page. To install it, follow the installation guide that matches your server and architecture.

We now have an infrastructure that gives us isolated environments with access to GPU resources. To use GPU acceleration in applications, several tools have been developed by NVIDIA (non-exhaustive list):
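After installation, a quick sanity check is to see whether an `nvidia` runtime is registered with Docker. The sketch below reads the Docker daemon configuration; the path `/etc/docker/daemon.json` is assumed to be the default Linux location, the sample JSON is illustrative, and a runtime can also be registered by other means, so a missing entry here is not conclusive.

```python
import json
from pathlib import Path

# Assumed default location of the Docker daemon configuration on Linux.
DAEMON_CONFIG = Path("/etc/docker/daemon.json")


def registered_runtimes(config_text):
    """Return the runtime names declared in a Docker daemon config string."""
    config = json.loads(config_text) if config_text.strip() else {}
    return sorted(config.get("runtimes", {}))


# Illustrative configuration declaring an "nvidia" runtime.
sample = '{"runtimes": {"nvidia": {"path": "nvidia-container-runtime", "runtimeArgs": []}}}'
print(registered_runtimes(sample))

if DAEMON_CONFIG.is_file():
    try:
        print(registered_runtimes(DAEMON_CONFIG.read_text()))
    except (OSError, ValueError):
        print("could not read or parse", DAEMON_CONFIG)
```

If `nvidia` appears in the list, `docker run --runtime nvidia ...` should be able to select the runtime.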

- CUDA Toolkit: a set of tools for developing software that can perform computations using the CPU, RAM, and GPU. It can be used on x86, Arm and POWER platforms.

- [NVIDIA cuDNN](https://developer.nvidia.com/cudnn): a library of primitives to accelerate deep learning networks and optimize GPU performance for major frameworks such as TensorFlow and Keras.

- NVIDIA cuBLAS: a library of GPU-accelerated linear algebra subroutines.

By using these tools in application code, AI and linear algebra tasks are accelerated. With the GPUs now visible, the application is able to send the data and operations to be processed on the GPU.

The CUDA Toolkit is the lowest-level option. It offers the most control (memory and instructions) to build custom applications. Libraries provide an abstraction of CUDA functionality. They allow you to focus on application development rather than on the CUDA implementation.

Once all these elements are in place, the architecture using the nvidia-docker service is ready to use.

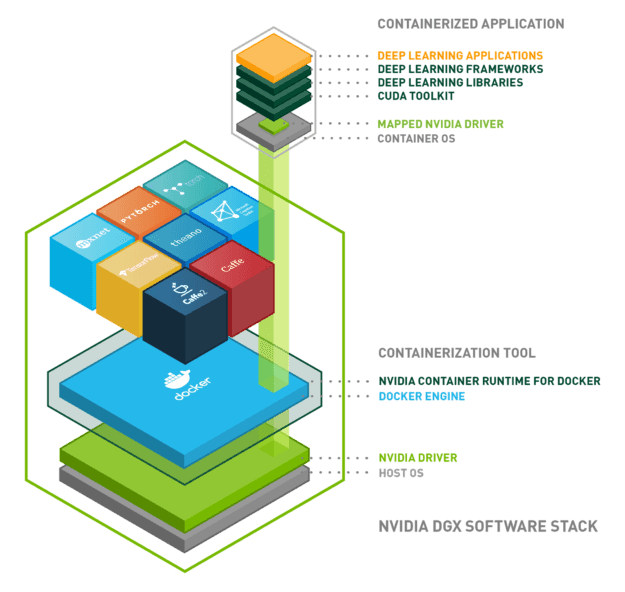

In this article is a diagram to summarize every little thing we have viewed:

Conclusion

We have set up an architecture allowing the use of GPU resources from our applications in isolated environments. To summarize, the architecture is composed of the following bricks:

- Operating system: Linux, Windows …

- Docker: isolation of the environment using Linux containers

- NVIDIA driver: installation of the driver for the hardware in question

- NVIDIA container runtime: orchestration of the previous three

- Applications on Docker container:

  - CUDA

  - cuDNN

  - cuBLAS

  - TensorFlow/Keras

NVIDIA continues to develop tools and libraries around AI technologies, with the goal of establishing itself as a leader. Other technologies may complement nvidia-docker or may be more suitable than nvidia-docker depending on the use case.
